
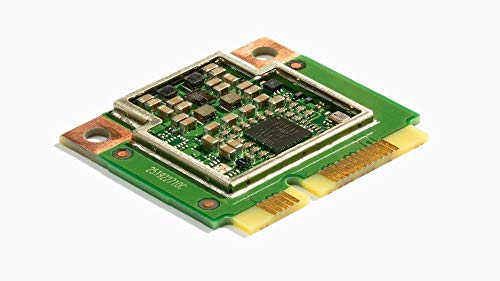

SOM (System-on-Module): Google Edge TPU ML Compute Accelerator. Integrate the Edge TPU into legacy and new systems using a standard Half-Mini PCIe card.


Out of stock

Description

Performs high-speed ML inferencing

The on-board Edge TPU coprocessor can perform 4 trillion operations per second (4 TOPS) while drawing 0.5 watts per TOPS, which works out to 2 TOPS per watt. For example, it can run state-of-the-art mobile vision models such as MobileNet v2 at 400 FPS in a power-efficient manner.
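The figures quoted above can be cross-checked with a little arithmetic. The sketch below is only illustrative: the function names are made up for this example, and the energy-per-frame value assumes the chip draws its full peak power while running MobileNet v2, which the description does not state, so treat it as an upper bound.

```python
# Figures quoted in the product description.
PEAK_TOPS = 4.0          # tera-operations per second
WATTS_PER_TOPS = 0.5     # power drawn for each TOPS
MOBILENET_V2_FPS = 400   # quoted MobileNet v2 throughput


def peak_power_watts(tops: float, watts_per_tops: float) -> float:
    """Total power at peak throughput."""
    return tops * watts_per_tops


def tops_per_watt(watts_per_tops: float) -> float:
    """Efficiency is the reciprocal of watts per TOPS."""
    return 1.0 / watts_per_tops


def frame_time_ms(fps: float) -> float:
    """Average time per frame in milliseconds at a given throughput."""
    return 1000.0 / fps


def energy_per_frame_mj(power_watts: float, fps: float) -> float:
    """Energy per frame in millijoules, ASSUMING constant peak power draw."""
    return 1000.0 * power_watts / fps


if __name__ == "__main__":
    power = peak_power_watts(PEAK_TOPS, WATTS_PER_TOPS)
    print(power)                                         # 2.0 (watts)
    print(tops_per_watt(WATTS_PER_TOPS))                 # 2.0 (TOPS per watt)
    print(frame_time_ms(MOBILENET_V2_FPS))               # 2.5 (ms per frame)
    print(energy_per_frame_mj(power, MOBILENET_V2_FPS))  # 5.0 (mJ per frame, upper bound)
```

In other words, the quoted numbers imply roughly 2 W at peak and about 2.5 ms per MobileNet v2 frame.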

Works with Debian Linux

Integrates with any Debian-based Linux system that has a compatible Half-Mini PCIe card slot.

Supports TensorFlow Lite

No need to build models from the ground up. TensorFlow Lite models can be compiled to run on the Edge TPU.
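As a rough illustration of the compile step, the sketch below wraps Google's `edgetpu_compiler` command-line tool. It assumes the tool is installed separately and that the input model is already fully 8-bit quantized, which the compiler requires. The helper names and the file name `mobilenet_v2_quant.tflite` are hypothetical and used only for this example.

```python
import shutil
import subprocess
from pathlib import Path
from typing import List, Optional


def edgetpu_compile_command(model_path: str, out_dir: str = ".") -> List[str]:
    """Build the compiler invocation; -o selects the output directory."""
    return ["edgetpu_compiler", "-o", out_dir, model_path]


def compiled_model_path(model_path: str, out_dir: str = ".") -> Path:
    """The compiler writes its output as <input name>_edgetpu.tflite."""
    return Path(out_dir) / f"{Path(model_path).stem}_edgetpu.tflite"


def compile_for_edgetpu(model_path: str, out_dir: str = ".") -> Optional[Path]:
    """Compile a quantized TensorFlow Lite model for the Edge TPU.

    Returns the expected output path, or None when edgetpu_compiler
    is not available on PATH (nothing is run in that case).
    """
    if shutil.which("edgetpu_compiler") is None:
        return None
    subprocess.run(edgetpu_compile_command(model_path, out_dir), check=True)
    return compiled_model_path(model_path, out_dir)


if __name__ == "__main__":
    # Hypothetical model file name, for illustration only.
    print(edgetpu_compile_command("mobilenet_v2_quant.tflite", "build"))
    print(compile_for_edgetpu("mobilenet_v2_quant.tflite", "build"))
```

The resulting `_edgetpu.tflite` file is what gets loaded on the device through the TensorFlow Lite interpreter with the Edge TPU delegate. Operations the compiler cannot map to the Edge TPU fall back to the host CPU.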

Supports AutoML Vision Edge

Easily build and deploy fast, high-accuracy custom image classification models to your device with AutoML Vision Edge.

Additional information

| Attribute | Value |
|---|---|
| Weight | 0.01 lbs |
| Dimensions | 0.41 × 5.82 × 9.7 in |
| Category | Electronics > Computers & Accessories > Computer Components > Single Board Computers |